Nous reproduisons ci-dessous un point de vue de Tomislav Sunic, cueilli sur le site Nous sommes partout et consacré à la notion de décadence. Ancien professeur de sciences politiques aux États-Unis et ancien diplomate croate, Tomislav Sunic a publié trois essais en France, Homo americanus (Akribéia, 2010) et La Croatie: un pays par défaut ? (Avatar Editions, 2010) ainsi qu'un recueil de textes et d'entretiens, Chroniques des Temps postmodernes (Avatar, 2014).

A quand la décadence finale ? De Salluste et Juvénal à nos jours

Les Anciens, c’est à dire nos ancêtres greco-germano-gallo-slavo-illyro-romains, étaient bien conscients des causes héréditaires de la décadence quoiqu’ils attribuassent à cette notion des noms fort variés. La notion de décadence, ainsi que sa réalité existent depuis toujours alors que sa dénomination actuelle ne s’implante solidement dans la langue française qu’au XVIIIème siècle, dans les écrits de Montesquieu. Plus tard, vers la fin du XIXème siècle, les poètes dits « décadents », en France, étaient même bien vus et bien lus dans les milieux littéraires traditionalistes, ceux que l’on désigne aujourd’hui, de façon commode, comme les milieux « d’extrême droite ». Par la suite, ces poètes et écrivains décadents du XIXème siècle nous ont beaucoup marqués, malgré leurs mœurs souvent débridées, métissées, alcoolisées et narcotisées, c’est-à-dire malgré leur train de vie décadent.

En Allemagne, vers la fin du XIXème siècle et au début du XXème siècle, bien que moins régulièrement qu’en France, le terme « Dekadenz » était également en usage dans la prose des écrivains réactionnaires et conservateurs qu’effrayaient le climat de déchéance morale et la corruption capitaliste dans la vie culturelle et politique de leur pays. Il faut souligner néanmoins que le mot allemand « Dekadenz », qui est de provenance française, a une signification différente dans la langue allemande, langue qui préfère utiliser son propre trésor lexical et dont, par conséquent, les signifiants correspondent souvent à un autre signification. Le bon équivalent conceptuel, en allemand, du mot français décadence serait le très unique terme allemand « Entartung », terme qui se traduit en français et en anglais par le lourd terme d’essence biologique de « dégénérescence » et « degeneracy », termes qui ne correspondent pas tout à fait à la notion originale d’ « Entartung » en langue allemande. Le terme allemand « Entartung », dont l’étymologie et le sens furent à l’origine neutres, désigne le procès de dé-naturalisation, ce qui n’a pas forcément partie liée à la dégénérescence biologique. Ce mot allemand, vu son usage fréquent sous le Troisième Reich devait subir, suite à la fin de la Deuxième Guerre mondiale et suite à la propagande alliée anti-allemande, un glissement sémantique très négatif de sorte qu’on ne l’utilise plus dans le monde de la culture et de la politique de l’Allemagne contemporaine.

En Europe orientale et communiste, durant la Guerre froide, le terme de décadence n’a presque jamais été utilisé d’une façon positive. À sa place, les commissaires communistes fustigeaient les mœurs capitalistes des Occidentaux en utilisant le terme révolutionnaire et passe-partout, notamment le terme devenu péjoratif (dans le lexique communiste) de « bourgeois ». En résumé, on peut conclure que les usagers les plus réguliers du terme « décadence » ainsi que ses plus farouches critiques sont les écrivains classés à droite ou à l’extrême droite.

On doit ici soulever trois questions essentielles. Quand la décadence se manifeste-t-elle, quelles sont ses origines et comment se termine-t-elle ? Une foule d’écrivains prémodernes et postmodernes, de J.B. Bossuet à Emile Cioran, chacun à sa façon et chacun en recourant à son propre langage, nous ont fourni des récits apocalyptiques sur la décadence qui nous conduit à son tour vers la fin du monde européen. Or force est de constater que l’Europe se porte toujours bel et bien malgré plusieurs décadences déjà subies à partir de la décadence de l’ancienne Rome jusqu’à celle de nos jours. À moins que nous ne soyons, cette fois-ci, voués – compte tenu du remplacement des peuples européens par des masses de peuplades non-européennes – non plus à la fin d’UNE décadence mais à LA décadence finale de notre monde européen tout court.

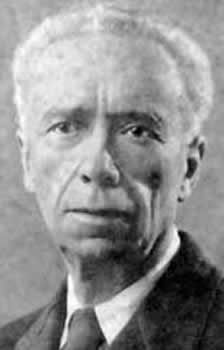

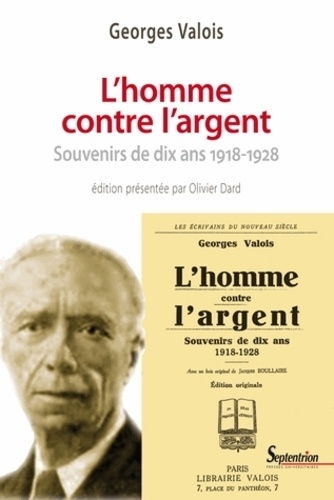

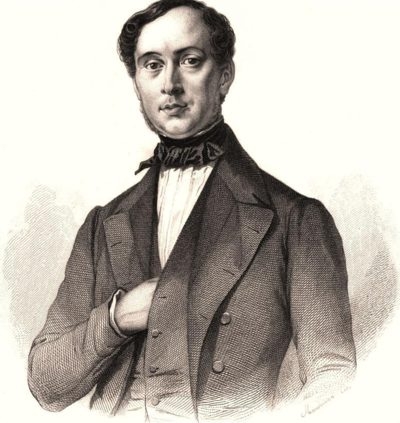

Avant que l’on commence à se lamenter sur les décadences décrites par nos ancêtres romains et jusque par nos auteurs contemporains, et quelle que soit l’appellation qui leur fut attribuée par les critiques modernes, « nationalistes », « identitaires », « traditionalistes de la droite alternative, » « de la droite extrême » et j’en passe, il est essentiel de mentionner deux écrivains modernes qui signalèrent l’arrivée de la décadence bien que leur approche respective de son contenu et de ses causes fut très divergente. Ce sont l’Allemand Oswald Spengler avec son Déclin de l’Occident, écrit au début du XXème siècle, et le Français Arthur de Gobineau avec son gros ouvrage Essai sur l’inégalité des races humaines, écrit soixante ans plut tôt. Tous deux étaient des écrivains d’une grande culture, tous deux partageaient la même vision apocalyptique de l’Europe à venir, tous deux peuvent être appelés des pessimistes culturels avec un sens du tragique fort raffiné. Or pour le premier de ces auteurs, Spengler, la décadence est le résultat du vieillissement biologique naturel de chaque peuple sur terre, vieillissement qui l’amène à un moment historique à sa mort inévitable. Pour le second, Gobineau, la décadence est due à l’affaiblissement de la conscience raciale qui fait qu’un peuple adopte le faux altruisme tout en ouvrant les portes de la cité aux anciens ennemis, c’est-à-dire aux Autres d’une d’autre race, ce qui le conduit peu à peu à s’adonner au métissage et finalement à accepter sa propre mort. À l’instar de Gobineau, des observations à peu près similaires seront faites par des savants allemands entre les deux guerres. On doit pourtant faire ici une nette distinction entre les causes et les effets de la décadence. Le tedium vitae (fatigue de vivre), la corruption des mœurs, la débauche, l’avarice, ne sont que les effets de la disparition de la conscience raciale et non sa cause. 
Le mélange des races et le métissage, termes mal vus aujourd’hui par le Système et ses serviteurs, étaient désignés par Gobineau par le terme de « dégénérescence ». Selon lui, celle-ci fonctionne dorénavant, comme une machine à broyer le patrimoine génétique des peuples européens. Voici une courte citation de son livre : « Je pense donc que le mot dégénéré, s’appliquant à un peuple, doit signifier et signifie que ce peuple n’a plus la valeur intrinsèque qu’autrefois il possédait, parce qu’il n’a plus dans ses veines le même sang, dont des alliages successifs ont graduellement modifié la valeur ; autrement dit, qu’avec le même nom, il n’a pas conservé la même race que ses fondateurs ; enfin, que l’homme de la décadence, celui qu’on appelle l’homme dégénéré, est un produit différent, au point de vue ethnique, du héros des grandes époques. »

Et plus tard, Gobineau nous résume peut-être en une seule phrase l’intégralité de son œuvre : « Pour tout dire et sans rien outrer, presque tout ce que la Rome impériale connut de bien sortit d’une source germanique ».

Ce qui saute aux yeux, c’est que soixante ans plus tard, c’est-à-dire au début du XXème siècle, l’Allemand Oswald Spengler, connu comme grand théoricien de la décadence, ne cite nulle part dans son œuvre le nom d’Arthur de Gobineau, malgré de nombreuses citations sur la décadence empruntées à d’autres auteurs français.

Nous allons poursuivre nos propos théoriques sur les causes du déclin de la conscience raciale et qui à son tour donne lieu au métissage en tant que nouveau mode de vie. Avant cela, il nous faut nous pencher sur la notion de décadence chez les écrivains romains Salluste et Juvénal et voir quel fut d’après eux le contexte social menant à la décadence dans l’ancienne Rome.

L’écrivain Salluste est important à plusieurs titres. Primo, il fut le contemporain de la conjuration de Catilina, un noble romain ambitieux qui avec nombre de ses consorts de la noblesse décadente de Rome faillit renverser la république romaine et imposer la dictature. Salluste fut partisan de Jules César qui était devenu le dictateur auto-proclamé de Rome suite aux interminables guerres civiles qui avaient appauvri le fonds génétique de nombreux patriciens romains à Rome.

Par ailleurs Salluste nous laisse des pages précieuses sur une notion du politique fort importante qu’il appelle « metus hostilis » ou « crainte de l’ennemi », notion qui constituait chez les Romains, au cours des guerres contre les Gaulois et Carthaginois au siècle précèdent, la base principale de leur race, de leur vertu, de leur virilité, avec une solide conscience de leur lignage ancestral. Or après s’être débarrassé militairement de « metus Punici » (NDLR: crainte du Cathaginois) et de « metus Gallici» (NDLR: crainte du Gaulois), à savoir après avoir écarté tout danger d’invasion extérieure, les Romains, au milieu du IIème siècle avant notre ère, ont vite oublié le pouvoir unificateur et communautaire inspiré par « metus hostilis » ou la « crainte de l’Autre » ce qui s’est vite traduit par la perte de leur mémoire collective et par un goût prononcé pour le métissage avec l’Autre des races non-européennes.

Voici une courte citation de Salluste dans son ouvrage, Catilina, Chapitre 10.

« Ces mêmes hommes qui avaient aisément supporté les fatigues, les dangers, les incertitudes, les difficultés, sentirent le poids et la fatigue du repos et de la richesse… L’avidité ruina la bonne foi, la probité, toutes les vertus qu’on désapprit pour les remplacer par l’orgueil, la cruauté, l’impiété, la vénalité. ».

Crainte de l’autre

La crainte de l’ennemi, la crainte de l’Autre, notion utilisée par Salluste, fut aux XIXème et XXème siècles beaucoup discutée par les historiens, politologues et sociologues européens. Cette notion, lancée par Salluste, peut nous aider aujourd’hui à saisir le mental des migrants non-européens qui s’amassent en Europe ainsi que le mental de nos politiciens qui les y invitent. Certes, la crainte de l’Autre peut être le facteur fortifiant de l’identité raciale chez les Européens de souche. Nous en sommes témoins aujourd’hui en observant la renaissance de différents groupes blancs et identitaires en Europe. En revanche, à un moment donné, le metus hostilis, à savoir la crainte des Autres, risque de se transformer en son contraire, à savoir l’amor hostilis, ou l’amour de l’ennemi qui détruit l’identité raciale et culturelle d’un peuple. Ainsi les Occidentaux de souche aujourd’hui risquent-ils de devenir peu à peu victimes du nouveau paysage multiracial où ils sont nés et où ils vivent. Pire, peu à peu ils commencent à s’habituer à la nouvelle composition raciale et finissent même par l’intérioriser comme un fait naturel. Ces mêmes Européens, seulement quelques décennies auparavant, auraient considéré l’idée d’un pareil changement racial et leur altruisme débridé comme surréel et morbide, digne d’être combattu par tous les moyens.

Nul doute que la crainte de l’Autre, qu’elle soit réelle ou factice, resserre les rangs d’un peuple, tout en fortifiant son homogénéité raciale et son identité culturelle. En revanche, il y a un effet négatif de la crainte des autres que l’on pouvait observer dans la Rome impériale et qu’on lit dans les écrits de Juvénal. Le sommet de l’amour des autres, ( l’ amor hostilis) ne se verra que vers la fin du XXème siècle en Europe multiculturelle. Suite à l’opulence matérielle et à la dictature du bien-être, accompagnées par la croyance à la fin de l’histoire véhiculée par les dogmes égalitaristes, on commence en Europe, peu à peu, à s’adapter aux mœurs et aux habitudes des Autres. Autrefois c’étaient Phéniciens, Juifs, Berbères, Numides, Parthes et Maghrébins et autres, combattus à l’époque romaine comme des ennemis héréditaires. Aujourd’hui, face aux nouveaux migrants non-européens, l’ancienne peur de l’Autre se manifeste chez les Blancs européens dans le mimétisme de l’altérité négative qui aboutit en règle générale à l’apprentissage du « déni de soi ». Ce déni de soi, on l’observe aujourd’hui dans la classe politique européenne et américaine à la recherche d’un ersatz pour son identité raciale blanche qui est aujourd’hui mal vue. A titre d’exemple cette nouvelle identité négative qu’on observe chez les gouvernants occidentaux modernes se manifeste par un dédoublement imitatif des mœurs des immigrés afro-asiatiques. On est également témoin de l’apprentissage de l’identité négative chez beaucoup de jeunes Blancs en train de mimer différents cultes non-européens. De plus, le renversement de la notion de « metus hostilis » en « amor hostilis » par les gouvernants européens actuels aboutit fatalement à la culture de la pénitence politique. 
Cette manie nationale-masochiste est surtout visible chez les actuels dirigeants allemands qui se lancent dans de grandes embrassades névrotiques avec des ressortissants afro-asiatiques et musulmans contre lesquels ils avaient mené des guerres meurtrières du VIIIe siècle dans l’Ouest européen et jusqu’au XVIIIe siècle dans l’Est européen.

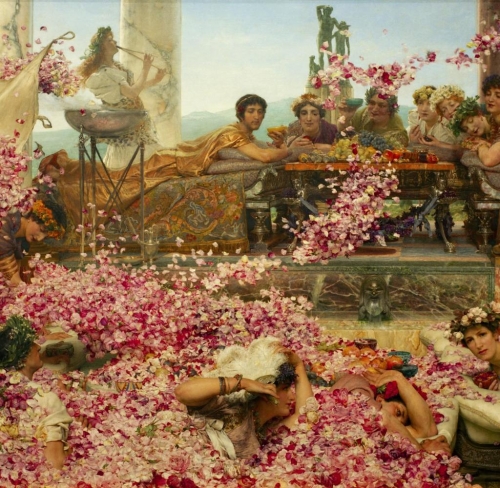

L’engouement pour l’Autre extra-européen – dont l’image est embellie par les médias et cinémas contemporains – était déjà répandu chez les patriciens romains décadents au Ier siècle et fut décrit par le satiriste Juvénal. Dans sa IIIème satire, intitulée Les Embarras de Rome (Urbis incommoda), Juvénal décrit la Rome multiculturelle et multiraciale où pour un esprit raffiné comme le sien il était impossible de vivre…

« Dans ces flots d’étrangers et pourtant comme rien

Depuis longtemps déjà l’Oronte syrien

Coule au Tibre, et transmet à Rome ses coutumes,

Sa langue, ses chanteurs aux bizarres costumes… »

Juvénal se plaint également des migrants juifs dans ses satires, ce qui lui a valu d’être taxé d’antisémitisme par quelques auteurs contemporains…

« Maintenant la forêt et le temple et la source

Sont loués à des Juifs, qui, pour toute ressource,

Ont leur manne d’osier et leur foin de rebut.

Là, chaque arbre est contraint de payer son tribut;

On a chassé la muse, ô Rome abâtardie

Et l’auguste forêt tout entière mendie. »

Les lignes de Juvénal sont écrites en hexamètres dactyliques ce qui veut dire en gros un usage d’échanges rythmiques entre syllabes brèves ou longues qui fournissent à chacune de ses satires une tonalité dramatique et théâtrale qui était très à la mode chez les Anciens y compris chez Homère dans ses épopées. À l’hexamètre latin, le traducteur français a substitué les mètres syllabiques rimés qui ont fort bien capturé le sarcasme désabusé de l’original de Juvénal. On est tenté de qualifier Juvénal de Louis Ferdinand Céline de l‘Antiquité. Dans sa fameuse VIème satire, qui s’intitule Les Femmes, Juvénal décrit la prolifération de charlatans venus à Rome d’Asie et d’Orient et qui introduisent dans les mœurs romaines la mode de la zoophilie et de la pédophilie et d’autres vices. Le langage de Juvénal décrivant les perversions sexuelles importées à Rome par des nouveaux venues asiatiques et africains ferait même honte aux producteurs d’Hollywood aujourd’hui. Voici quelques-uns de ses vers traduits en français, de manière soignés car destinés aujourd’hui au grand public :

« Car, intrépide enfin, si ton épouse tendre

Voulait sentir son flanc s’élargir et se tendre

Sous le fruit tressaillant d’un adultère amour,

Peut-être un Africain serait ton fils un jour. »

Les Romains utilisaient le mot « Aethiopis », Ethiopiens pour désigner les Noirs d’Afrique.

Qui interprète l’interprète ?

L’interprétation de chaque ouvrage par n’importe quel auteur, sur n’importe quel sujet social et à n’importe quelle époque, y compris les vers de l’écrivain latin Juvénal, se fera en fonction des idées politiques dominantes à savoir du Zeitgeist régnant. Or qui va contrôler l’interprète aujourd’hui si on est obligé de suivre les oukases pédagogiques de ses chefs mis en place après la fin de la Deuxième Guerre mondiale ? À cet effet on peut citer Juvénal et les fameux vers de sa VIème satire : « Quis custodet ipsos custodes » à savoir qui va garder les gardiens, c’est à dire qui va contrôler nos architectes de la pensée unique qui sévissent dans les universités et dans les médias ?

A peu près le même principe de censure et d’autocensure règne aujourd’hui au sujet de l’étude et la recherche sur les différentes races. Aujourd’hui, vu le dogme libéralo-communiste du progrès et la conviction que les races ne sont qu’une construction sociale et non un fait biologique et en raison du climat d’auto-censure qui sévit dans la haute éducation et dans les médias, il n’est pas surprenant que des savants qui analysent les différences entre races humaines soient souvent accusés d’utiliser des prétendus « stéréotypes ethniques ». Or le vocable « stéréotype » est devenu aujourd’hui un mot d’ordre chez les bien-pensants et chez les hygiénistes de la parole en Europe. La même procédure d’hygiénisme lexical a lieu lorsqu’un biologiste tente d’expliquer le rôle des différents génomes au sein des différentes races. Un savant généticien, s’il s’aventure à démystifier les idées égalitaires sur la race et l’hérédité risque d’être démonisé comme raciste, fasciste, xénophobe ou suprémaciste blanc. La nouvelle langue de bois utilisée par les médias contre les mal-pensants se propage dans toutes les chancelleries et toutes les universités européennes.

Certes, les idées, en l’occurrence de mauvaises idées, mènent le monde, et non l’inverse. Dans la même veine, les idées dominantes qui sont à la base du Système d’aujourd’hui décident de l’interprétation des découvertes dans les sciences biologiques et non l’inverse. Nous avons récemment vu la chasse aux sorcières dont fut victime le Prix Nobel James Watson, codécouvreur de la structure de l’ADN et du décryptage du génome humain. Il a été attaqué par les grand médias pour des propos prétendument racistes émis il y a une dizaine d’année à propos des Africains. Je le cite : « Même si j’aimerais croire que tous les êtres humains sont dotés d’une intelligence égale, ceux qui ont affaire à des employés noirs ne pensent pas la même chose» . Ce que Watson a dit est partagé par des milliers de biologistes et généticiens mais pour des raisons que nous avons déjà mentionnées, ils se taisent.

Nos Anciens possédaient un sens très aigu de leur héritage et de leur race qu’ils appelaient genus. Il existe une montagne d’ouvrages qui traitent de la forte conscience de la parenté commune et du lignage commun chez les Anciens. Nous n’allons pas citer tous les innombrables auteurs, notamment les savants allemands de la première moitié du XXème siècle qui ont écrit un tas de livres sur la dégénérescence raciale des Romains et d’autres peuples européens et dont les ouvrages sont non seulement mal vus mais également mal connus par le grand public d’aujourd’hui. Il est à noter qu’avant la Deuxième Guerre mondiale et même un peu plus tard, les savants et les historiens d’Europe et d’Amérique se penchaient sur le facteur racial beaucoup plus souvent et plus librement qu’aujourd’hui.

Il va de soi que les anciens Romains ignoraient les lois mendéliennes de l’hérédité ainsi que les complexités du fonctionnement de l’ADN, mais ils savaient fort bien comment distinguer un barbare venu d’Europe du nord d’un barbare venu d’Afrique. Certains esclaves étaient fort prisés, tels les Germains qui servaient même de garde de corps auprès des empereurs romains. En revanche, certains esclaves venues d’Asie mineure et d’Afrique, étaient mal vus et faisaient l’objet de blagues et de dérisions populaires.

Voici une brève citation de l’historien américain Tenney Frank, tirée de son livre Race Mixture in the Roman Empire ( Mélange des races dans l’Empire de Rome), qui illustre bien ce que les Romains pensaient d’eux-mêmes et des autres. Au début du XXème siècle Frank était souvent cité par les latinistes et il était considéré comme une autorité au sujet de la composition ethnique de l’ancienne Rome. Dans son essai, il opère une classification par races des habitants de l’ancienne Rome suite à ses recherches sur les inscriptions sépulcrales effectuées pendant son séjour à Rome. Voici une petite traduction en français de l’un de ces passages : « .…de loin le plus grand nombre d’esclaves venait de l’Orient, notamment de la Syrie et des provinces de l’Asie Mineure, avec certains venant d’Égypte et d’Afrique (qui, en raison de la classification raciale peuvent être considérés comme venant de l’Orient). Certains venaient d’Espagne et de Gaule, mais une proportion considérable d’entre eux étaient originaires de l’Est. Très peu d’esclaves furent recensés dans les provinces alpines et danubiennes, tandis que les Allemands apparaissent rarement, sauf parmi les gardes du corps impériaux. (L’auteur) Bang remarque que les Européens étaient de plus grand service à l’empire en tant que soldats et moins en tant que domestiques. »

Et plus tard il ajoute :

« Mais ce qui resta à l’arrière-plan et régit constamment sur toutes ces causes de la désintégration de Rome fut après tout le fait que les gens qui avaient construit Rome ont cédé leur place à un race différente. »

Les anciens Romains avaient une idée claire des différents tribus et peuples venus d’Orient à Rome. Comme l’écrit un autre auteur, « Les esclaves d’Asie mineure et les affranchis cariens, mysiens, phrygiens et cappadociens, à savoir les Orientaux, étaient, par rapport aux esclaves d’autres provinces, particulièrement méprisés dans la conscience romaine. Ces derniers sont même devenus proverbiaux à cause de leur méchanceté. »

En conclusion, on peut dire qu’une bonne conscience raciale ne signifie pas seulement une bonne connaissance des théories raciales ou pire encore la diffusion des insultes contre les non-Européens. Avoir la conscience raciale signifie tout d’abord avoir une bonne mémoire de la lignée commune et une bonne mémoire du destin commun. Cela a été le cas avec les tribus européennes et les peuples européens depuis la nuit des temps. Une fois l’héritage du peuple, y compris son hérédité, oublié ou compromis, la société commence à se désagréger comme on l’a vu à Rome et comme on le voit chaque jour en Europe aujourd’hui. « Les premiers Romains tenaient à leur lignée avec beaucoup de respect et appliquaient un système de connubium selon lequel ils ne pouvaient se marier qu’au sein de certains stocks approuvés » . Inutile de répéter comment on devrait appliquer le devoir de connubium en Europe parmi les jeunes Européens aujourd’hui. Voilà un exemple qui dépasse le cadre de notre discussion. Suite à la propagande hollywoodienne de longue haleine il est devenu à la mode chez de jeunes Blanches et Blancs de se lier avec un Noir ou un métis. Il s’agit rarement d’une question d’amour réciproque mais plutôt d’une mode provenant du renversement des valeurs traditionnelles.

Il est inutile de critiquer les effets du métissage sans en mentionner ses causes. De même on doit d’abord déchiffrer les causes de l’immigration non-européenne avant de critiquer ses effets. Certes, comme if fut déjà souligné la cause de la décadence réside dans l’oubli de la conscience raciale. Or celle-ci avait été soit affaiblie soit supprimée par le christianisme primitif dont les avatars séculiers se manifestent aujourd’hui dans l’idéologie de l’antifascisme et la montée de diverses sectes égalitaristes et mondialistes qui prêchent la fin de l’histoire dans une grande embrassade multiraciale et transsexuelle. Critiquer les dogmes chrétiens et leur visions œcuméniques vis-à-vis des immigrés est un sujet autrement plus explosif chez nos amis chrétiens traditionalistes et surtout chez nos amis d’Amérique, le pays où la Bible joue un rôle très important. Or faute de s’en prendre aux causes délétères de l’égalitarisme chrétien on va tourner en rond avec nos propos creux sur le mal libéral ou le mal communiste. On a beau critiquer les « antifas » ou bien le grand capital ou bien les banksters suisses et leurs manœuvres mondialistes, reste qu’aujourd’hui les plus farouches avocats de l’immigration non-européenne sont l’Église Catholique conciliaire et ses cardinaux en Allemagne et en Amérique. Roger Pearson, un sociobiologiste anglais de renom l’écrit . « Se répandant d’abord parmi les esclaves et les classes inférieures de l’empire romain, le christianisme a fini par enseigner que tous les hommes étaient égaux aux yeux d’un dieu créateur universel, une idée totalement étrangère à la pensée européenne… Puisque tous les hommes et toutes les femmes étaient les « enfants de Dieu », tous étaient égaux devant leur divin Créateur ! »

Si l’on veut tracer et combattre les racines de la décadence et ses effets qui se manifestent dans le multiculturalisme et le métissage, il nous faut nous pencher d’une manière critique sur les enseignements du christianisme primitif. Ce que l’on observe dans l’Occident d’aujourd’hui, submergé par des populations non-européennes, est le résultat final et logique de l’idée d’égalitarisme et de globalisme prêchée par le christianisme depuis deux mille ans.

Tomislav Sunic (Nous sommes partout, 16 octobre 2019)

Notes :

1/ Montesquieu, Considérations sur les causes de la grandeur des Romains et de leur décadence (Paris : Librairie Ch. Delagrave, 1891), ch. IX, p. 85-86, où il cite Bossuet : “Le sénat se remplissait de barbares ; le sang romain se mêlait ; l’amour de la patrie, par lequel Rome s’était élevée au-dessus de tous les peuples du monde, n’était pas naturel à ces citoyens venus de dehors.” http://classiques.uqac.ca/classiques/montesquieu/consider...

T. Sunic, « Le bon truc ; drogue et démocratie », dans Chroniques des Temps Postmodernes (Dublin, Paris : éd. Avatar, 2014), pp. 227-232. En anglais : “The Right Stuff; Drugs and Democracy”, in Postmortem Report; Cultural Examinations from Postmodernity (London: Arktos, 2017), pp. 61-65.

Voir T. Sunic, « L’art dans le IIIème Reich », Écrits de Paris, juillet-août 2002, nr. 645. En anglais : “Art in the Third Reich: 1933-45”, in Postmortem Report (London: Arktos, 2017), pp. 95-110.

Arthur de Gobineau, Essai sur l’inégalité des races humaines (Paris : Éditions Pierre Belfond, 1967), Livres 1 à 4, pp. 58-59.

Ibid., Livres 5 à 6, p. 164. https://ia802900.us.archive.org/27/items/EssaiSurLinegali...

Salluste, Œuvres de Salluste, Conjuration de Catilina (Paris : C.L.F. Panckoucke, 1838), pp. 17-18. https://ia802706.us.archive.org/5/items/uvresdesalluste00...

Satires de Juvénal et de Perse, Satire III, traduites en vers français par M. J. Lacroix (Paris : Firmin Didot frères, libraires, 1846), p. 47.

Ibid., p. 43.

Ibid., p. 165. Également sur le site : http://remacle.org/bloodwolf/satire/juvenal/satire3b.htm

« L’homme le plus riche de Russie va rendre à James Watson sa médaille Nobel », Le Figaro, 10 décembre 2014. http://www.lefigaro.fr/international/2014/12/10/01003-20141210ARTFIG00268-l-homme-le-plus-riche-de-russie-va-rendre-a-james-watson-sa-medaille-nobel.php

Tenney Frank, “Race Mixture in the Roman Empire”, The American Historical Review, vol. XXI, nr. 4, juillet 1916, p. 701.

Ibid., p. 705.

Heikki Solin, “Zur Herkunft der römischen Sklaven”. https://www.academia.edu/10087127/Zur_Herkunft_der_r%C3%B6mischen_Sklaven

Roger Pearson, “Heredity in the History of Western Culture”, The Mankind Quarterly, XXXV, nr. 3, printemps 1995, p. 233.

Ibid., p. 234.

Ainsi, la paix est un idéal, et en tant que telle, comme l’espérance d’Epiméthée, elle mérite nos efforts plus que nos ricanements. Il est juste que nous fassions tout pour faire advenir une paix lucide, sachant bien qu’elle ne parviendra jamais à réalisation. En ce sens, l’aspiration à la société cosmopolite est une aspiration morale naturelle à l’humanité, et vouloir récuser cette aspiration au nom de la permanence des conflits serait vouloir retirer à l’homme la moitié de sa condition. En revanche, prétendre atteindre la société cosmopolite comme un programme, à travers la politique, serait susciter un mélange préjudiciable de la morale et de la politique. Parce que nous sommes des créatures politiques, nous devons savoir que la paix universelle n’est qu’un idéal et non une possibilité de réalisation. Parce que nous sommes des créatures morales, nous ne pouvons nous contenter benoîtement des conflits sans espérer jamais les réduire au maximum. Le « règne des fins » ne doit pas aller jusqu’à constituer une eschatologie politique (qui existe aussi bien dans le libéralisme que dans le marxisme, et que l’on trouve au XIX° siècle jusque chez Proudhon), parce qu’alors il suscite une sorte de crase dommageable et irréaliste entre la politique et la morale. Mais l’espérance du bien ne constitue pas seulement une sorte d’exutoire pour un homme malheureux parce qu’englué dans les exigences triviales d’un monde conflictuel : elle engage l’humanité à avancer sans cesse vers son idéal, et par là à améliorer son monde dans le sens qui lui paraît le meilleur, même si elle ne parvient jamais à réalisation complète.

Dieses Buch enthält erstmals umfangreiche biografische Daten des Philoso-

D’abord instituteur pour pallier la disparition brutale de son père, le germanophone Julien Freund se retrouve otage des Allemands en juillet 1940 avant de poursuivre ses études à l’Université de Strasbourg repliée à Clermont-Ferrand. Il entre dès janvier 1941 en résistance dans le réseau Libération, puis dans les Groupes francs de combat de Jacques Renouvin. Arrêté en juin 1942, il est détenu dans la forteresse de Sisteron d’où il s’évade deux ans plus tard. Il rejoint alors un maquis FTP (Francs-tireurs et partisans) de la Drôme. Il y découvre l’endoctrinement communiste et la bassesse humaine.

La politique se fait sur le terrain, et non dans les divagations spéculatives (p. 13). » Il relève dans son étude remarquable sur la notion de décadence que « si les civilisations ne se valent pas, c’est que chacune repose sur une hiérarchie des valeurs qui lui est propre et qui est la résultante d’options plus ou moins conscientes concernant les investissements capables de stimuler leur énergie. Cette hiérarchie conditionne donc l’originalité de chaque civilisation. Reniant leur passé, les Européens se sont laissés imposer, par leurs intellectuels, l’idée que leur civilisation n’était sous aucun rapport supérieure aux autres et même qu’ils devraient battre leur coulpe pour avoir inventé le capitalisme, l’impérialisme, la bombe thermonucléaire, etc. Une fausse interprétation de la notion de tolérance a largement contribué à cette culpabilisation. En effet, ni les idées, ni les valeurs ne sont tolérantes. Refusant de reconnaître leur originalité, les Européens n’adhèrent plus aux valeurs dont ils sont porteurs, de sorte qu’ils sont en train de perdre l’esprit de leur culture et le dynamisme qui en découle. Si encore ils ne faisaient que récuser leurs philosophies du passé, mais ils sont en train d’étouffer le sens de la philosophie qu’ils ont développée durant des siècles. La confusion des valeurs et la crise spirituelle qui en est la conséquence en sont le pitoyable témoignage. L’égalitarisme ambiant les conduit jusqu’à oublier que la hiérarchie est consubstantielle à l’idée même de valeur (p. 364) ».

Carl Schmitt and Leo Strauss are extremely popular in China, especially in Mainland China—this is no longer a secret in the Western academia. As early as 2003, Stanley Rosen had already told the Boston Globe that “A very, very significant circle of Strauss admirers has sprung up, of all places, China.”

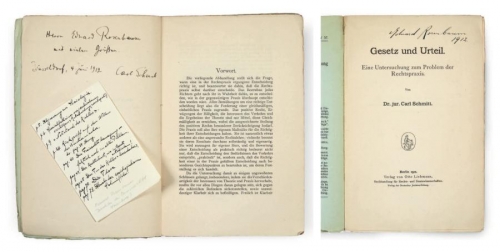

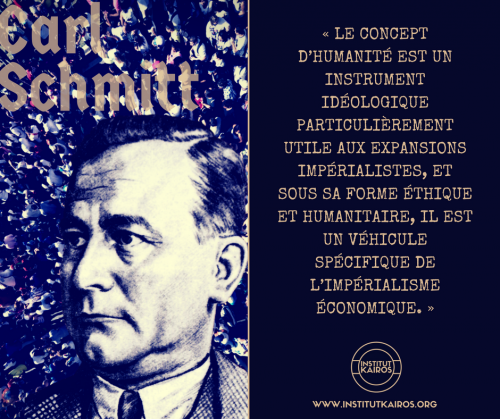

De l’œuvre proprement juridique du jeune Carl Schmitt, disons de celle d’avant 1933, nous possédons en français Théorie de la constitution (1928, PUF 1993), La valeur de l’État et la signification de l’individu (1914, Droz 2003), mais il nous manquait jusqu’aujourd’hui un petit ouvrage de 1912 intitulé Loi et jugement, qu’Olivier Beaud, dans sa préface à l’édition française de Théorie de la constitution, qualifie de « véritable recherche de théorie du droit abordant les questions les plus fondamentales ».

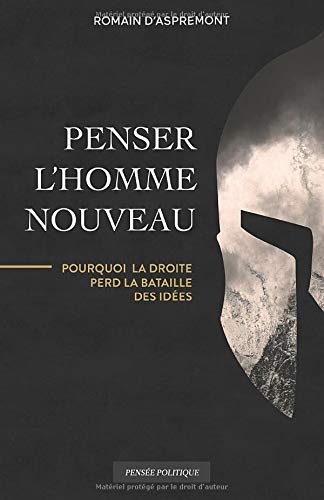

La seconde raison de la défaite perpétuelle de la droite, c’est son conservatisme. Les réaco-conservateurs assimilent trop souvent l’avenir à un déploiement inéluctable des forces progressistes. Ils en viennent à prendre l’objet (l’avenir) façonné par le sujet (la gauche) pour le sujet lui-même. Le futur étant devenu synonyme d’avancées « progressistes », l’unique remède ne pourrait être que son contraire – le passé – plutôt qu’un avenir alternatif. Or il y a là une forme de défaitisme, comme si la droite assimilait sa propre déconfiture, ratifiant le monopole de la gauche sur l’avenir. Puisque l’Histoire n’est qu’une longue série de victoires progressistes, c’est l’avenir lui-même qu’il faudrait brider, plutôt que les acteurs qui le façonnent. Ralentir le temps et sanctuariser certaines institutions apparaît alors comme la solution par défaut.

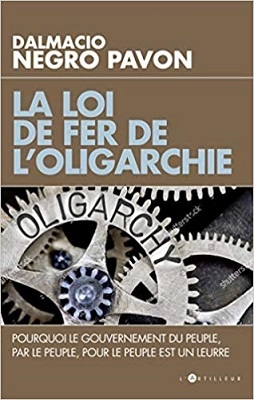

Sourcé et documenté, mais en même temps décapant sans concessions et affranchi de tous les conventionnalismes, ce livre atypique sort résolument des sentiers battus de l’histoire des idées politiques. Son auteur, Dalmacio Negro Pavón, politologue renommé dans le monde hispanique, est au nombre de ceux qui incarnent le mieux la tradition académique européenne, celle d’une époque où le politiquement correct n’avait pas encore fait ses ravages, et où la majorité des universitaires adhéraient avec conviction, – et non par opportunisme comme si souvent aujourd’hui -, aux valeurs scientifiques de rigueur, de probité et d’intégrité. Que nous dit-il ? Résumons-le en puisant largement dans ses analyses, ses propos et ses termes:

Une révolution a besoin de dirigeants, mais l’étatisme a infantilisé la conscience des Européens. Celle-ci a subi une telle contagion que l’émergence de véritables dirigeants est devenue quasiment impossible et que, lorsqu’elle se produit, la méfiance empêche de les suivre. Mieux vaut donc, une fois parvenu à ce stade, faire confiance au hasard, à l’ennui ou à l’humour, autant de forces historiques majeures, auxquelles on n’accorde pas suffisamment d’attention parce qu’elles sont cachées derrière le paravent de l’enthousiasme progressiste.

Le réaliste authentique affirme que la finalité propre à la politique est le bien commun, mais il reconnaît la nécessité vitale des finalités non politiques (le bonheur et la justice). La politique est selon lui au service de l’homme. La mission de la politique n’est pas de changer l’homme ou de le rendre meilleur (ce qui est le chemin des totalitarismes), mais d’organiser les conditions de la coexistence humaine, de mettre en forme la collectivité, d’assurer la concorde intérieure et la sécurité extérieure. Voilà pourquoi les conflits doivent être, selon lui, canalisés, réglementés, institutionnalisés et autant que possible résolus sans violence.

Cela étant, on peut être un sceptique ou un pessimiste lucide mais refuser pour autant de désespérer. On ne peut pas éliminer les oligarchies. Soit ! Mais, comme nous le dit Dalmacio Negro Pavón, il y a des régimes politiques qui sont plus ou moins capables d’en mitiger les effets et de les contrôler. Le nœud de la question est d’empêcher que les détenteurs du pouvoir ne soient que de simples courroies de transmission des intérêts, des désirs et des sentiments de l’oligarchie politique, sociale, économique et culturelle. Les hommes craignent toujours le pouvoir auquel ils sont soumis, mais le pouvoir qui les soumet craint lui aussi toujours la collectivité sur laquelle il règne. Et il existe une condition essentielle pour que la démocratie politique soit possible et que sa corruption devienne beaucoup plus difficile sinon impossible, souligne encore Dalmacio Negro Pavón. Il faut que l’attitude à l’égard du gouvernement soit toujours méfiante, même lorsqu’il s’agit d’amis ou de personnes pour lesquelles on a voté. Bertrand de Jouvenel disait à ce propos très justement: « le gouvernement des amis est la manière barbare de gouverner ».

« Pour une évidente clarté sémantique, écrit-il, il serait préférable de laisser au Flamby normal, à l’exquise Najat Vallaud-Belkacem et aux morts vivants du siège vendu, rue de Solférino, ce mot de socialiste et d’en trouver un autre plus pertinent. Sachant que travaillisme risquerait de susciter les mêmes confusions lexicales, les termes de solidarisme ou, pourquoi pas, celui de justicialisme, directement venu de l’Argentine péroniste, seraient bien plus appropriés. »

Me relisant, je me dis que j'ai finalement du mérite à m'être en fin de compte plongé dans la lecture de l'ouvrage de François Bousquet dont on ne pourra guère m'accuser, du coup, de vanter louchement les mérites qui, sans être absolument admirables ni même originaux, n'en sont pas moins bien réels : mes préventions, toujours, tombent devant ma curiosité, ma faim ogresque de lectures, et ce n'est que fort normal.

Reste une autre solution, plus fictionnelle, donc métapolitique, que réellement, modestement politique, sur le papier en tout cas ne souffrant point l'endogamie propre à l'élite française, de droite comme de gauche, solution purement romanesque qu'explore Bruno de Cessole dans son dernier livre, L'Île du dernier homme, et que nous pourrions du reste je crois sans trop de mal rapprocher de la vision de l'Islam développée depuis quelques années par Marc-Édouard Nabe, consistant à trouver, dans la vitalité incontestable des nouveaux Barbares, le sang nécessaire pour irriguer la vieille pompe à bout de force d'un Occident en déclin, d'une France complètement vidée de sa substance, d'un arbre, si cher au

La deuxième partie de l'ouvrage est consacrée aux textes proprement dits de Carl Schmitt mais il faut attendre la page 157 de l'ouvrage, dans une étude intitulée Catholicisme romain et forme politique datant de 1923, pour que le nom de Cortés apparaisse, d'ailleurs de façon tout à fait anecdotique. Cette étude, plus ample que la première, intitulée Visibilité de l’Église et qui ne nous intéresse que par sa mention d'une paradoxale quoique rigoureuse légalité du Diable (4), mentionne donc le nom de l'essayiste espagnol et, ô surprise, celui d'Ernest Hello (cf. p. 180) mais, plus qu'une approche de Cortés, elle s'intéresse à l'absence de toute forme de représentation symbolique dans le monde technico-économique contemporain, à la différence de ce qui se produisait dans la société occidentale du Moyen Âge. Alors, la représentation, ce que nous pourrions sans trop de mal je crois appeler la visibilité au sens que Schmitt donne à ce mot, conférait «à la personne du représentant une dignité propre, car le représentant d'une valeur élevée ne [pouvait] être dénué de valeur» tandis que, désormais, «on ne peut pas représenter devant des automates ou des machines, aussi peu qu'eux-mêmes ne peuvent représenter ou être représentés» car, si l’État «est devenu Léviathan, c'est qu'il a disparu du monde du représentatif». Carl Schmitt fait ainsi remarquer que «l'absence d'image et de représentation de l'entreprise moderne va chercher ses symboles dans une autre époque, car la machine est sans tradition, et elle est si peu capable d'images que même la République russe des soviets n'a pas trouvé d'autre symbole», pour l'illustration de ce que nous pourrions considérer comme étant ses armoiries, «que la faucille et le marteau» (p. 170). 
Suit une très belle analyse de la rhétorique de Bossuet, qualifiée de «discours représentatif» qui «ne passe pas son temps à discuter et à raisonner» et qui est plus que de la musique : «elle est une dignité humaine rendue visible par la rationalité du langage qui se forme», ce qui suppose «une hiérarchie, car la résonance spirituelle de la grande rhétorique procède de la foi en la représentation que revendique l'orateur» (p. 172), autrement dit un monde supérieur garant de celui où faire triompher un discours qui s'ente lui-même sur la Parole. Le décisionnisme, vu de cette manière, pourrait n'être qu'un pis-aller, une tentative, sans doute désespérée, de fonder ex abrupto une légitimité en prenant de vitesse l'ennemi qui, lui, n'aura pas su ou voulu tirer les conséquences de la mort de Dieu dans l'hic et nunc d'un monde quadrillé et soumis par la Machine, fruit tavelé d'une Raison devenue folle et tournant à vide. Il y a donc quelque chose de prométhéen dans la décision radicale de celui qui décide d'imposer sa vision du monde, dictateur ou empereur-Dieu régnant sur le désert qu'est la réalité profonde du monde moderne.

Voilà bien ce qui fascine Carl Schmitt lorsqu'il lit la prose de Donoso Cortés, éblouissante de virtuosité comme a pu le remarquer, selon lui et «avec un jugement critique sûr» (p. 217), un Barbey d'Aurevilly : son intransigeance radicale, non pas certes sur les arrangements circonstanciels politiques, car il fut un excellent diplomate, mais sur la nécessité, pour le temps qui vient, de prendre les décisions qui s'imposent, aussi dures qu'elles puissent paraître, Carl Schmitt faisant à ce titre remarquer que Donoso Cortés est l'auteur de «la phrase la plus extrême du XIXe siècle : le jour des anéantissements [ou plutôt : des négations] radicaux et des affirmations souveraines arrive», «llega el día de las negaciones radicales y de las afirmaciones soberanas» (p. 218), une phrase dont chacun des termes est bien évidemment plus que jamais valable à notre époque, mais qui est devenue parfaitement inaudible.

Dr Giles Fraser is a journalist, broadcaster and Rector at the south London church of St Mary’s, Newington

Top spot must go to  Second spot is shared by two very contrasting approaches, one from the Right and one from the Left.

The Road to Somewhere by David Goodhart The defence of these ‘somewheres’, often derided as small-town, small-minded ‘deplorables’ is vividly captured by

But despite the fact that many people exist within the quadrant it describes (Left on economics, Right on culture) it is still struggling to break through. It’s perhaps because it’s easier for the Right to break Left on economics than for the Left to break Right on culture — which is why the Conservative and Republican parties may be more amenable to this sort of thinking than their opponents. But even this is not a natural fit. Which brings us back to Gramsci. The old is dead. The new is yet to be born.

... Cette revue de détail nécessairement partielle et non limitative ne signifie en aucune façon qu’il existe, ou que va se créer un front vraiment “marxiste” contre le gauchisme-sociétal qu’on a tendance à assimiler au “marxisme culturel” pour le marier encore plus aisément à l’hypercapitalisme. (Leur “marxisme culturel” est un “marxisme de spectacle”, comme il y a la “société de spectacle” de Debord.) Seule importe cette position d'opposition très diverse à la passion fusionnelle capitalisme-gauchisme-sociétal, comme un socle continuel de critique, de mise en évidence et de dénonciation du simulacre capitalisme-gauchisme-sociétal.

Avant que l’on commence à se lamenter sur les décadences décrites par nos ancêtres romains et jusque par nos auteurs contemporains, et quelle que soit l’appellation qui leur fut attribuée par les critiques modernes, « nationalistes », « identitaires », « traditionalistes de la droite alternative », « de la droite extrême » et j’en passe, il est essentiel de mentionner deux écrivains modernes qui signalèrent l’arrivée de la décadence bien que leur approche respective de son contenu et de ses causes fût très divergente. Ce sont l’Allemand Oswald Spengler avec son Déclin de l’Occident, écrit au début du XXème siècle, et le Français Arthur de Gobineau avec son gros ouvrage Essai sur l’inégalité des races humaines, écrit soixante ans plus tôt. Tous deux étaient des écrivains d’une grande culture, tous deux partageaient la même vision apocalyptique de l’Europe à venir, tous deux peuvent être appelés des pessimistes culturels avec un sens du tragique fort raffiné. Or pour le premier de ces auteurs, Spengler, la décadence est le résultat du vieillissement biologique naturel de chaque peuple sur terre, vieillissement qui l’amène à un moment historique à sa mort inévitable. Pour le second, Gobineau, la décadence est due à l’affaiblissement de la conscience raciale qui fait qu’un peuple adopte le faux altruisme tout en ouvrant les portes de la cité aux anciens ennemis, c’est-à-dire aux Autres d’une autre race, ce qui le conduit peu à peu à s’adonner au métissage et finalement à accepter sa propre mort. À l’instar de Gobineau, des observations à peu près similaires seront faites par des savants allemands entre les deux guerres. On doit pourtant faire ici une nette distinction entre les causes et les effets de la décadence. Le tedium vitae (fatigue de vivre), la corruption des mœurs, la débauche, l’avarice, ne sont que les effets de la disparition de la conscience raciale et non sa cause.
Le mélange des races et le métissage, termes mal vus aujourd’hui par le Système et ses serviteurs, étaient désignés par Gobineau par le terme de « dégénérescence ». Selon lui, celle-ci fonctionne dorénavant, comme une machine à broyer le patrimoine génétique des peuples européens. Voici une courte citation de son livre : « Je pense donc que le mot dégénéré, s’appliquant à un peuple, doit signifier et signifie que ce peuple n’a plus la valeur intrinsèque qu’autrefois il possédait, parce qu’il n’a plus dans ses veines le même sang, dont des alliages successifs ont graduellement modifié la valeur ; autrement dit, qu’avec le même nom, il n’a pas conservé la même race que ses fondateurs ; enfin, que l’homme de la décadence, celui qu’on appelle l’homme dégénéré, est un produit différent, au point de vue ethnique, du héros des grandes époques. »

L’écrivain Salluste est important à plusieurs titres. Primo, il fut le contemporain de la conjuration de Catilina, un noble romain ambitieux qui avec nombre de ses consorts de la noblesse décadente de Rome faillit renverser la république romaine et imposer la dictature. Salluste fut partisan de Jules César qui était devenu le dictateur auto-proclamé de Rome suite aux interminables guerres civiles qui avaient appauvri le fonds génétique de nombreux patriciens romains à Rome. Nul doute que la crainte de l’Autre, qu’elle soit réelle ou factice, resserre les rangs d’un peuple, tout en fortifiant son homogénéité raciale et son identité culturelle. En revanche, il y a un effet négatif de la crainte des autres que l’on pouvait observer dans la Rome impériale et qu’on lit dans les écrits de Juvénal. Le sommet de l’amour des autres, ( l’ amor hostilis) ne se verra que vers la fin du XXème siècle en Europe multiculturelle. Suite à l’opulence matérielle et à la dictature du bien-être, accompagnées par la croyance à la fin de l’histoire véhiculée par les dogmes égalitaristes, on commence en Europe, peu à peu, à s’adapter aux mœurs et aux habitudes des Autres. Autrefois c’étaient Phéniciens, Juifs, Berbères, Numides, Parthes et Maghrébins et autres, combattus à l’époque romaine comme des ennemis héréditaires. Aujourd’hui, face aux nouveaux migrants non-européens, l’ancienne peur de l’Autre se manifeste chez les Blancs européens dans le mimétisme de l’altérité négative qui aboutit en règle générale à l’apprentissage du « déni de soi ». Ce déni de soi, on l’observe aujourd’hui dans la classe politique européenne et américaine à la recherche d’un ersatz pour son identité raciale blanche qui est aujourd’hui mal vue. A titre d’exemple cette nouvelle identité négative qu’on observe chez les gouvernants occidentaux modernes se manifeste par un dédoublement imitatif des mœurs des immigrés afro-asiatiques. 
On est également témoin de l’apprentissage de l’identité négative chez beaucoup de jeunes Blancs en train de mimer différents cultes non-européens. De plus, le renversement de la notion de « metus hostilis » en « amor hostilis » par les gouvernants européens actuels aboutit fatalement à la culture de la pénitence politique. Cette manie nationale-masochiste est surtout visible chez les actuels dirigeants allemands qui se lancent dans de grandes embrassades névrotiques avec des ressortissants afro-asiatiques et musulmans contre lesquels ils avaient mené des guerres meurtrières du VIIIe siècle dans l’Ouest européen et jusqu’au XVIIIe siècle dans l’Est européen.

Les lignes de Juvénal sont écrites en hexamètres dactyliques, ce qui veut dire en gros un usage d’échanges rythmiques entre syllabes brèves ou longues qui fournissent à chacune de ses satires une tonalité dramatique et théâtrale qui était très à la mode chez les Anciens, y compris chez Homère dans ses épopées. À l’hexamètre latin, le traducteur français a substitué les mètres syllabiques rimés qui ont fort bien capturé le sarcasme désabusé de l’original de Juvénal. On est tenté de qualifier Juvénal de Louis-Ferdinand Céline de l’Antiquité. Dans sa fameuse VIème satire, qui s’intitule Les Femmes, Juvénal décrit la prolifération de charlatans venus à Rome d’Asie et d’Orient et qui introduisent dans les mœurs romaines la mode de la zoophilie et de la pédophilie et d’autres vices. Le langage de Juvénal décrivant les perversions sexuelles importées à Rome par des nouveaux venus asiatiques et africains ferait même honte aux producteurs d’Hollywood aujourd’hui. Voici quelques-uns de ses vers traduits en français, de manière soignée car destinés aujourd’hui au grand public :